SonarQube dropped native support for HTTPS, so you need to stand it up behind a reverse proxy to serve it over SSL. The same procedure works for putting any web app, such as Jenkins, Confluence, or Jira, behind SSL. The other cool thing about this approach is that you can get higher density in low-volume environments by running multiple containers on one host. For example, I access my home instances with the following URLs:

They are all containers running on a single host, reverse proxied by NGINX. This way I don't have to remember which port each app is running on, and the URLs are much cleaner.

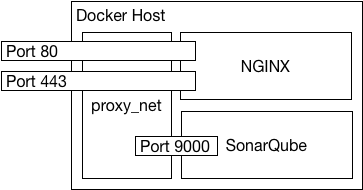

So, today I am going to show how to run SonarQube in a Docker container and expose it to the outside world through NGINX running in another container.

For this implementation, I will be mounting the config file, along with the certificate and key file, into the NGINX container. For the SonarQube container, we will be setting environment variables. Therefore, we need the following 5 files available on the Docker host:

- sonarqube.env – This is a key-value pair file for the SonarQube environment variables.

- sonarqube.crt – This is the full-chain SSL certificate (the server certificate followed by any intermediate certificates).

- sonarqube.key – This is the private key.

- default.conf – This is the default site configuration file for NGINX.

- docker-compose.yaml – This is the docker-compose file to bring everything up easily.
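
Before wiring these files into NGINX, it can save some head-scratching to confirm that sonarqube.crt and sonarqube.key actually belong together, since NGINX refuses to load a mismatched pair. The following is a minimal Python sketch. The helper name cert_matches_key is hypothetical and not part of any tool used in this post. It relies on the standard library's ssl.SSLContext.load_cert_chain, which raises an error when the key does not match the certificate. It assumes PEM files and an unencrypted private key. With an encrypted key, load_cert_chain would prompt for a passphrase instead.

```python
import ssl


def cert_matches_key(cert_path: str, key_path: str) -> bool:
    """Return True if the PEM certificate chain and private key load as a pair.

    ssl.SSLContext.load_cert_chain() raises ssl.SSLError when a file is not
    valid PEM or the key does not match the leaf certificate, and OSError
    (e.g. FileNotFoundError) when a file is missing or unreadable.
    Assumes an unencrypted key: an encrypted one would trigger a passphrase prompt.
    """
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    try:
        context.load_cert_chain(certfile=cert_path, keyfile=key_path)
    except (ssl.SSLError, OSError):
        return False
    return True
```

For example, cert_matches_key("/etc/madridcentral/sonarqube.crt", "/etc/madridcentral/sonarqube.key") should return True for the pair that is mounted into NGINX later in this post.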

The sonarqube.env looks something like this:

    SONARQUBE_JDBC_USERNAME=sonarqube
    SONARQUBE_JDBC_PASSWORD=notmypassword
    SONARQUBE_JDBC_URL=jdbc:postgresql://postgres.madridcentral.net/sonarqube
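
Docker Compose passes whatever KEY=VALUE lines it finds in this file into the container. A mistyped variable name therefore produces no error; SonarQube just never sees the value. Here is a small Python sketch that parses a file in that simplified format and lists any of the three variables above that are missing or empty. It skips blank lines and # comments. The parsing rules are an approximation of Compose's env_file handling, not a reimplementation of it: quoting and interpolation are ignored. The helper names are hypothetical.

```python
from pathlib import Path

# The three variables this post puts in sonarqube.env.
REQUIRED_KEYS = (
    "SONARQUBE_JDBC_USERNAME",
    "SONARQUBE_JDBC_PASSWORD",
    "SONARQUBE_JDBC_URL",
)


def parse_env_text(text: str) -> dict:
    """Parse simplified KEY=VALUE lines into a dict.

    Blank lines and lines starting with '#' are skipped. Each line is split on
    its first '=', so values may themselves contain '='. Quoting and variable
    interpolation are NOT handled (a simplification of Compose's rules).
    """
    env = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep or not key.strip():
            raise ValueError(f"line {lineno}: expected KEY=VALUE, got {raw!r}")
        env[key.strip()] = value
    return env


def load_env_file(path: str) -> dict:
    """Read and parse an env file such as sonarqube.env."""
    return parse_env_text(Path(path).read_text(encoding="utf-8"))


def missing_keys(env: dict, required=REQUIRED_KEYS) -> list:
    """Return the required keys that are absent or have an empty value."""
    return [key for key in required if not env.get(key)]
```

Running missing_keys(load_env_file("sonarqube.env")) against the file above should return an empty list. Any name it returns is worth fixing before running docker-compose.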

The NGINX default.conf file is a straightforward reverse proxy config. We redirect HTTP on port 80 to HTTPS on port 443, and proxy_pass requests to the SonarQube container by its container name on port 9000.

    server {
        listen 80;
        listen [::]:80;
        server_name sonarqube.madridcentral.net;
        return 301 https://$server_name$request_uri;
    }

    server {
        listen 443 ssl;
        listen [::]:443 ssl;
        server_name sonarqube.madridcentral.net;

        ssl_certificate /etc/ssl/certs/sonarqube.crt;
        ssl_certificate_key /etc/ssl/private/sonarqube.key;

        access_log /var/log/nginx/sonarqube.access.log;

        location / {
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            # SonarQube uses X-Forwarded-Proto to build correct https:// redirects
            proxy_set_header X-Forwarded-Proto https;
            proxy_set_header X-Forwarded-SSL on;
            proxy_set_header X-Forwarded-Host $host;
            proxy_pass http://sonarqube_container:9000;
            proxy_redirect off;
        }
    }

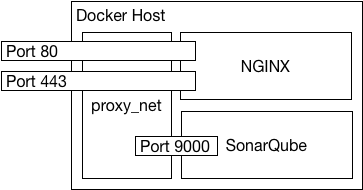
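
From app to app, only server_name, the certificate paths, and the proxy_pass target change. So the same pair of server blocks can be stamped out for the other apps mentioned at the top, such as Jenkins, Confluence, or Jira. A minimal Python sketch of that idea follows. Apart from the SonarQube entry, the hostname, container name, and port are hypothetical placeholders. It assumes each app has its own NAME.crt/NAME.key pair mounted into the NGINX container, where NAME is the first label of the hostname.

```python
# Only the SonarQube entry comes from this post; the Jenkins entry is a
# hypothetical example (8080 is Jenkins' usual default HTTP port).
APPS = {
    "sonarqube.madridcentral.net": ("sonarqube_container", 9000),
    "jenkins.example.com": ("jenkins_container", 8080),
}

# Doubled braces are literal NGINX braces; single-brace fields are filled by format().
SERVER_TEMPLATE = """\
server {{
    listen 80;
    listen [::]:80;
    server_name {host};
    return 301 https://$server_name$request_uri;
}}

server {{
    listen 443 ssl;
    listen [::]:443 ssl;
    server_name {host};

    ssl_certificate /etc/ssl/certs/{name}.crt;
    ssl_certificate_key /etc/ssl/private/{name}.key;

    access_log /var/log/nginx/{name}.access.log;

    location / {{
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto https;
        proxy_set_header X-Forwarded-SSL on;
        proxy_set_header X-Forwarded-Host $host;
        proxy_pass http://{upstream}:{port};
        proxy_redirect off;
    }}
}}
"""


def render_server_blocks(apps: dict) -> str:
    """Render an HTTP->HTTPS redirect block plus an HTTPS proxy block per app."""
    blocks = []
    for host, (upstream, port) in sorted(apps.items()):
        name = host.split(".", 1)[0]  # "sonarqube.madridcentral.net" -> "sonarqube"
        blocks.append(
            SERVER_TEMPLATE.format(host=host, name=name, upstream=upstream, port=port)
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    print(render_server_blocks(APPS))
```

The output can be written to default.conf. Each extra app's container also needs to join proxy_net so NGINX can resolve its name. Generating the file keeps the per-app blocks identical except for the fields that genuinely differ.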

For the docker-compose file, we need version 3.5 of the Compose file format, which is the version that added the name property for networks, so that we can give the user-defined network an explicit name. I am naming it proxy_net in this example. Docker has deprecated legacy container links, so a user-defined network is now the preferred way to let containers communicate and resolve each other by container name.

    version: "3.5"

    services:
      sonarqube_container:
        container_name: sonarqube_container
        image: sonarqube
        networks:
          - proxy_net
        restart: always
        expose:
          - "9000"
        env_file:
          - sonarqube.env

      reverse_proxy:
        container_name: reverse_proxy
        depends_on:
          - sonarqube_container
        image: nginx
        networks:
          - proxy_net
        ports:
          - "80:80"
          - "443:443"
        restart: always
        volumes:
          - /etc/madridcentral/default.conf:/etc/nginx/conf.d/default.conf
          - /etc/madridcentral/sonarqube.crt:/etc/ssl/certs/sonarqube.crt
          - /etc/madridcentral/sonarqube.key:/etc/ssl/private/sonarqube.key

    networks:
      proxy_net:
        name: proxy_net
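
On a user-defined network like proxy_net, Docker's embedded DNS server answers lookups for container names. That is what lets default.conf say proxy_pass http://sonarqube_container:9000. To check that resolution from inside the network, a tiny Python sketch like the one below can be run in any container attached to proxy_net that has Python available. The nginx image may not include Python, so a throwaway Python container is assumed here. The helper name resolve is hypothetical.

```python
import socket
from typing import Optional


def resolve(name: str) -> Optional[str]:
    """Return the IPv4 address the local resolver gives for name, or None.

    Inside a container on a user-defined Docker network, container names such
    as "sonarqube_container" are answered by Docker's embedded DNS server.
    """
    try:
        return socket.gethostbyname(name)
    except OSError:
        # socket.gaierror (an OSError subclass) means the name did not resolve.
        return None
```

An equivalent one-off check from the host might look like docker run --rm --network proxy_net python:3 python -c "import socket; print(socket.gethostbyname('sonarqube_container'))". It should print the SonarQube container's address on proxy_net once the stack is up.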

Now we can use the following command to bring it all up:

    docker-compose up -d

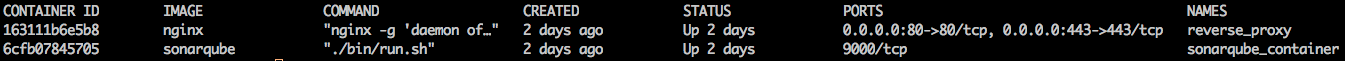

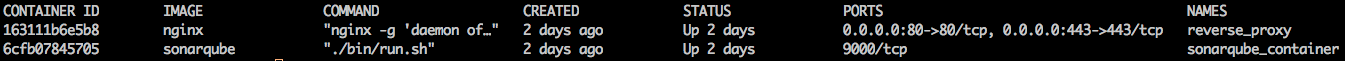
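
SonarQube can take a while to finish starting. Until it does, NGINX will typically answer with 502 Bad Gateway. Rather than refreshing the browser, a script can poll SonarQube's GET /api/system/status Web API endpoint through the proxy until it reports UP. This is a minimal Python sketch that assumes the hostname from default.conf and a certificate the machine running it already trusts. The attempt count and delay are arbitrary choices, and the function names are hypothetical.

```python
import json
import time
import urllib.request
from typing import Callable

# Hostname taken from default.conf; assumes its certificate is trusted locally.
STATUS_URL = "https://sonarqube.madridcentral.net/api/system/status"


def fetch_status(url: str = STATUS_URL, timeout: float = 10.0) -> str:
    """GET /api/system/status and return its "status" field (e.g. "STARTING", "UP")."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)["status"]


def wait_until_up(
    fetch: Callable[[], str] = fetch_status,
    attempts: int = 30,
    delay: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Call fetch() until it returns "UP"; give up after `attempts` tries."""
    for attempt in range(attempts):
        try:
            if fetch() == "UP":
                return True
        except (OSError, ValueError, KeyError):
            # A 502 from NGINX (HTTPError), connection/TLS errors (URLError),
            # or an unexpected body all count as "not up yet".
            pass
        if attempt < attempts - 1:  # no pointless sleep after the last try
            sleep(delay)
    return False
```

Calling wait_until_up() right after docker-compose up -d returns True once SonarQube reports UP. With the defaults, it returns False after roughly five minutes. The fetch and sleep parameters exist so the polling loop can be exercised without a live server.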

We should see something similar to the following: